The Franklin Park Herald of Northlake has an article about Microsoft's container data center, which is slated for completion in the first half of October.

Microsoft building slated for fall completion

July 23, 2008

By MARK LAWTON mlawton@pioneerlocal.com

Construction on Microsoft's data center in Northlake is on schedule to tentatively be finished in the first half of October.

The 550,000-square-foot building at 601 Northwest Avenue will house thousands of servers used to control Microsoft's online services.

The Microsoft data center in Northlake will contain equipment that supplies power, water and networking capabilities.

The outer shell of the building is complete. A "significant portion" of the building's electrical and mechanical work has also been completed, said Mike Manos, general manager of data center services.

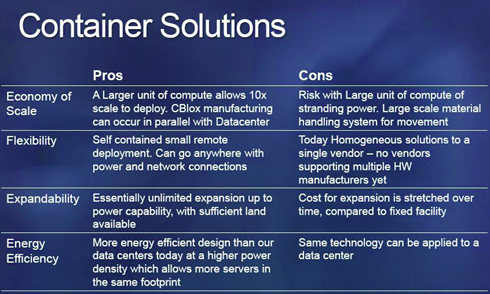

The building will be a "container data center" and will be constructed in a manner that resembles an Erector Set or Lego toys.

"Literally, a truck will pull up with a container on its back," Manos said. "The container has the computer equipment and cooling and electrical systems. It plugs into what we refer to as the spine, which gives it power and water (for cooling the equipment) and networking capabilities. Then the truck pulls away."

When the electrical and mechanical work is done, Microsoft will start the commissioning process. That's when they start testing out all the computer network servers -- "tens to hundreds of thousands," Manos said.

That process will take one to two months. Then the center will start being used.

Microsoft's PDC (Professional Developers Conference) is scheduled for Oct. 28-30, where we will see whether the rumors about Microsoft's cloud services are true.

Microsoft Mum On 'Red Dog' Cloud Computing

The Windows-compatible platform can be seen as Microsoft's counterpart to Amazon's Elastic Compute Cloud service, known as EC2, and to the Google App Engine.

By Richard Martin

InformationWeek

July 23, 2008 12:00 PM

Attempting to respond to cloud computing initiatives from Google and Amazon (NSDQ: AMZN), Microsoft (NSDQ: MSFT) is apparently in the process of preparing a cloud-based platform for Windows that is codenamed "Red Dog."

Though reports of the project have been circulating in the blogosphere for months, Microsoft has not publicly described the service. Microsoft did not respond to repeated requests for comment for this story.

Providing developers with flexible, pay-as-you-go storage and hosting resources on Microsoft infrastructure, Red Dog can be seen as Microsoft's counterpart to Amazon's Elastic Compute Cloud service, known as EC2, and to the Google App Engine, according to Reuven Cohen, founder and chief technologist for Toronto-based Enomaly, which offers its own open-source cloud platform.

"It seems that Microsoft is working on a project codenamed 'Red Dog' which is said to be an 'EC2 for Windows' cloud offering," Cohen wrote on his blog, Elastic Vapor, last week. "The details are sketchy, but the word on the street is that it will launch in October during Microsoft's PDC2008 developers conference in Los Angeles."

Convenient Timing? Or planned execution?