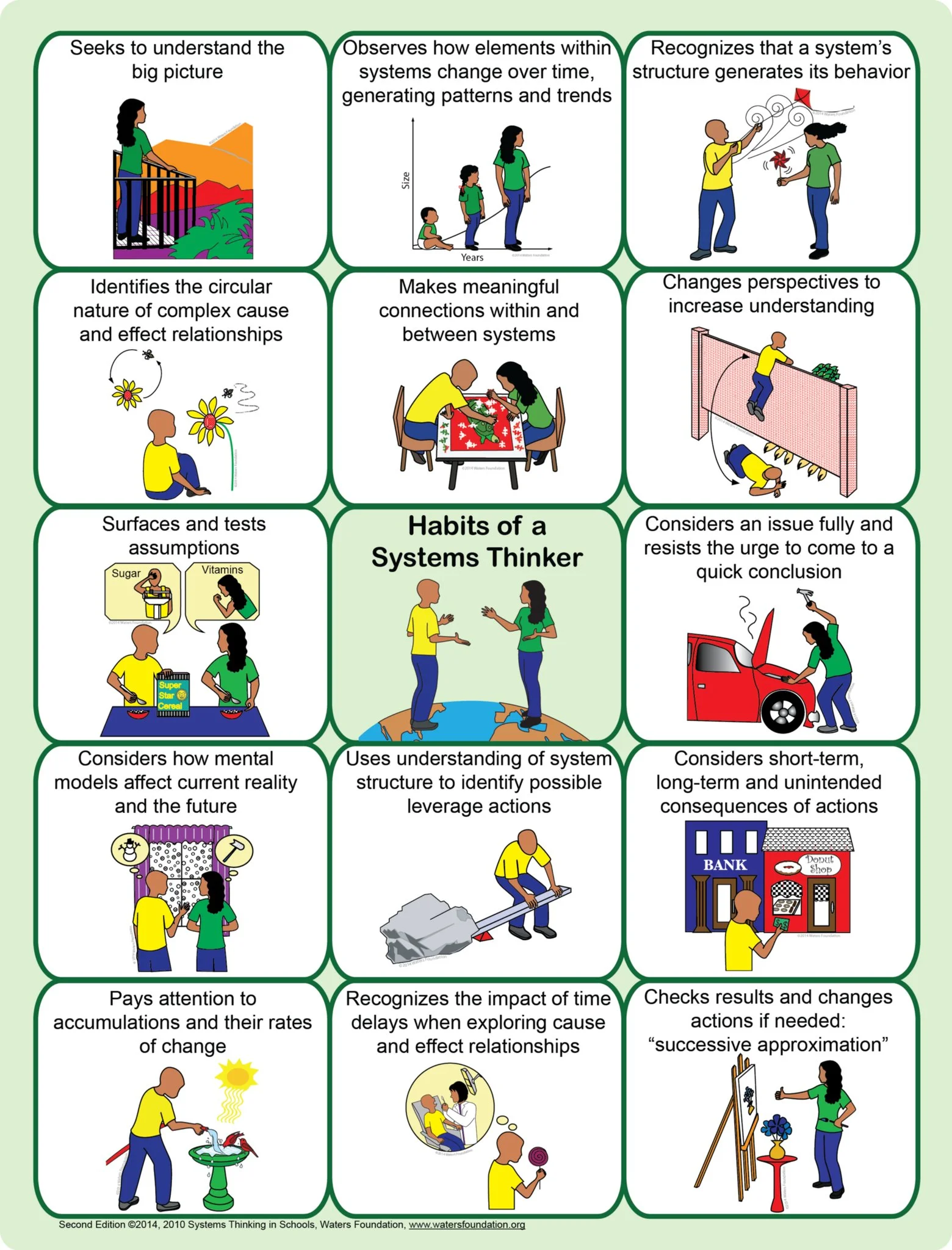

I saw this graphic on a twitter post. If you like it you buy a poster on the Waters Foundation web site.

So many of my friends think this way and it is to see a well done graphic to illustrate a system approach.

Your Custom Text Here

I saw this graphic on a twitter post. If you like it you buy a poster on the Waters Foundation web site.

So many of my friends think this way and it is to see a well done graphic to illustrate a system approach.

Google’s Urs Hölzle posted on Google placing its largest server order in its history 15 years ago.

15 years ago we placed the largest server offer in our history: 1680 servers, packed into the now infamous "corkboard" racks that packed four small motherboards onto a single tray. (You can see some preserved racks at Google in Building 43, at the Computer History Museum in Mountain View, and at the American Museum of Natural History in DC,http://americanhistory.si.edu/press/fact-sheets/google-corkboard-server-1999.)

At the time of the order, we had a grand total of 112 servers so 1680 was a huge step. But by the summer, these racks were running search for millions of users. In retrospect the design of the racks wasn't optimized for reliability and serviceability, but given that we only had two weeks to design them, and not much money to spend, things worked out fine.

I read this thinking how impactful was this large server order. Couldn’t figure what I would post on how the order is significant.

Then I ran into this post on Site Reliability Engineering dated Apr 28, 2014, and realized there was a huge impact by Google starting the idea of a site reliability engineering team.

Here is one the insights shared.

The solution that we have in SRE -- and it's worked extremely well -- is an error budget. An error budget stems from this basic observation: 100% is the wrong reliability target for basically everything. Perhaps a pacemaker is a good exception! But, in general, for any software service or system you can think of, 100% is not the right reliability target because no user can tell the difference between a system being 100% available and, let's say, 99.999% available. Because typically there are so many other things that sit in between the user and the software service that you're running that the marginal difference is lost in the noise of everything else that can go wrong.If 100% is the wrong reliability target for a system, what, then, is the right reliability target for the system? I propose that's a product question. It's not a technical question at all. It's a question of what will the users be happy with, given how much they're paying, whether it's direct or indirect, and what their alternatives are.The business or the product must establish what the availability target is for the system. Once you've done that, one minus the availability target is what we call the error budget; if it's 99.99% available, that means that it's 0.01% unavailable. Now we are allowed to have .01% unavailability and this is a budget. We can spend it on anything we want, as long as we don't overspend it.

Here is another rule that is good to think about when running operations.

One of the things we measure in the quarterly service reviews (discussed earlier), is what the environment of the SREs is like. Regardless of what they say, how happy they are, whether they like their development counterparts and so on, the key thing is to actually measure where their time is going. This is important for two reasons. One, because you want to detect as soon as possible when teams have gotten to the point where they're spending most of their time on operations work. You have to stop it at that point and correct it, because every Google service is growing, and, typically, they are all growing faster than the head count is growing. So anything that scales headcount linearly with the size of the service will fail. If you're spending most of your time on operations, that situation does not self-correct! You eventually get the crisis where you're now spending all of your time on operations and it's still not enough, and then the service either goes down or has another major problem.

WSJ has an article on companies buying from Amazon Web Services instead of from a server vendor.

The Lafayette, La., company, has been shifting a growing proportion of its computing chores to computers operated by Amazon.com Inc. AMZN +0.65% In the past year, Schumacher purchased just one server from Hewlett-Packard Co., HPQ -0.04%says Douglas Menefee, Schumacher's chief information officer.

Five years ago, the company may have bought 50 such servers for as much as $12,000 apiece. "We don't really buy hardware anymore," says Mr. Menefee.

End users are dumping the old way of buying IT hardware then attempting the integration in-house or with IT services. Users in the past were so happy it worked whether it was efficient and effective was many times not an issue. Remember when you had a performance problem and the answer was to upgrade or buy more hardware?

Now end user want IT services that have been marketed by amazon.com, google, microsoft, salesforce, and others. Enterprises want Big Data environments and high performance compute (HPC) solutions. Here is an example of one solution for those who want to buy IT gear, but want an integrated deployed solution.